Artificial intelligence (AI) has become essential in healthcare, particularly for diagnosis. AI is already a valuable ally for doctors in identifying breast cancer and skin cancer, among others. But these computer models are often highly specialized and difficult to adapt to other diseases. The arrival of a new kind of AI, known as foundation models, could change that. Like ChatGPT and other large language models, these models are trained on a wide variety of data, which makes them highly versatile.

Researchers from Lehigh University, Massachusetts General Hospital and Harvard University (USA) have created BiomedGPT, a foundation model trained exclusively on medical data and capable of accurately diagnosing a wide range of diseases. The scientists describe it in the journal Nature Medicine.

A tailor-made AI

ChatGPT, Llama, Gemini… All these AIs are very powerful thanks to the sheer amount of information they have analyzed. However, they are not very reliable when it comes to medicine. “They do not have access to much medical data, because most of it is not published on the internet in order to protect patients' privacy,” explains Lichao Sun, professor of computer science at Lehigh University and creator of BiomedGPT. “As a result, they do not necessarily perform well in medical diagnosis.”

His model, by contrast, is trained solely on medical data, including nearly 600,000 medical images and nearly 200 million sentences of medical text. That may seem enormous, but it is tiny compared with the vast amount of data that ChatGPT and other language models have ingested by analyzing everything they could find on the internet. As a result, BiomedGPT is much smaller, but much more precise. “In addition, the data stays on site: we do not have to send it to an external server, as would be the case if we used ChatGPT,” he continues. “Hospitals want to be able to process their medical data autonomously. The only way to achieve this was to create and train a model of their own.”


Small size, but high precision

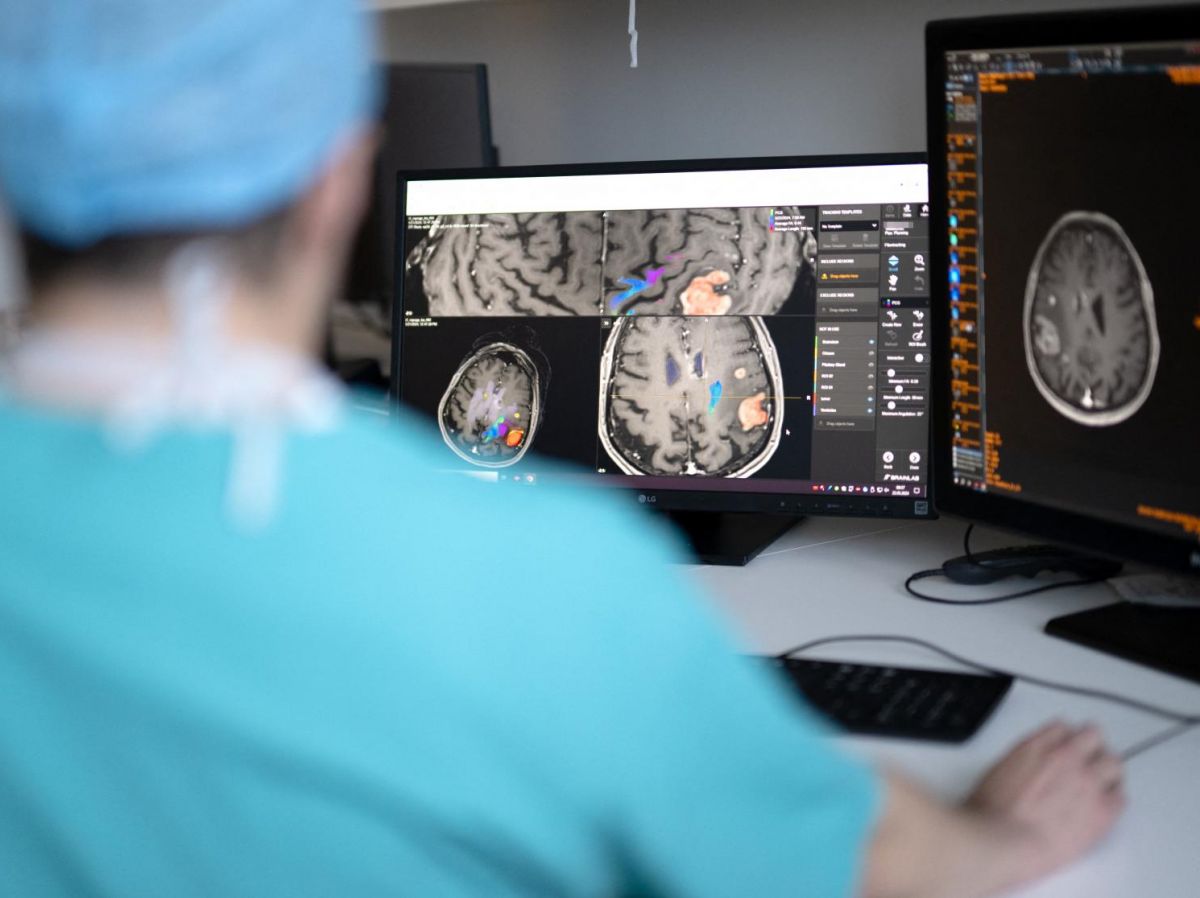

Some large language models have been designed specifically for medicine. One example is Med-PaLM, developed by Google. Its multimodal version analyzes images as well as text, and its largest version has 562 billion parameters. BiomedGPT is about 3,000 times smaller, yet performs just as well. The researchers tested their model on 25 different tasks, including the analysis of radiology images, and it achieved excellent results on most of them. It also performed as well as human experts at writing medical reports.


A model that can be easily installed anywhere

“The model was evaluated on data from Massachusetts General Hospital with good results,” confirms Xiang Li, a professor of radiology at the hospital and at Harvard University. “We are now looking at how it could be integrated into the hospital's operations, which its small size will make easier.” Because it is lightweight, it can run on small computers, unlike large commercial language models, which require an internet connection and huge computer servers. “This will make it possible, for example, to install it on equipment such as ultrasound machines,” he continues. “And we will not depend on the internet, because a poor connection can cause delays, and in medicine, any delay can be serious.”