In October 2024, the Cybathlon showcased assistant robots: robotic arms attached to wheelchairs that allow paralyzed people to perform daily tasks they can no longer carry out because of their disability. These robots were controlled either manually or by voice, with or without the help of artificial intelligence. A new approach could make this control more natural by letting users guide their robot by thought alone. On March 6, 2025, researchers at the University of California, San Francisco, presented in the journal Cell a brain implant that decodes the user's intention and turns it into commands for a robotic arm. The device could ease the adoption of these assistant robots and give paralyzed people greater autonomy.

A difficult approach to implement

Other thought-controlled robotic arms had already been tested. But their use was occasional and never lasted more than a few days, because brain activity is difficult to decode consistently over time. Indeed, previous studies have shown that this activity can shift slightly from one day to the next, small variations that are nevertheless enough to disrupt the decoding of information.

A dance between user thinking and AI

To better understand this drift phenomenon that blocked the long-term use of these brain-machine interfaces, the researchers analyzed the brain activity of two quadriplegics over several weeks. The interface used was placed on the motor cortex where, using its 128 electrodes, it captured neural activity when the user imagined moving their right hand. Then, an artificial intelligence decoded this information and used it to guide a virtual hand, displayed on a computer screen.

Each time the person imagined making the movement in question, brain activity could change very slightly, probably reflecting the brain's neural plasticity. But seeing the action produced by their thought on screen allowed participants to refine their brain commands, which then helped the AI learn to decode them better. “This combined learning between humans and AI is the next step for these brain-machine interfaces,” enthuses neurologist Karunesh Ganguly, author of the study, in a press release.

AI can adapt to changes in brain activity over time

These changes in neural activity during movement varied from day to day. The activity pattern remained the same, but it shifted slightly, like a boat drifting in the sea. This disrupted decoding, reducing the interface's ability to accurately interpret the user's thoughts. The authors believe this drift in neural activity is particularly pronounced in paralyzed people, who cannot see the action triggered by their thoughts and therefore cannot refine them.

But the AI could be trained to detect this drift by analyzing how activity had shifted over the previous days. In this way, the device could refine itself, correctly decoding the participant's thoughts.
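The idea of a pattern that keeps its shape but drifts can be illustrated with a toy simulation. The sketch below is not the researchers' method: it assumes, purely for illustration, a two-dimensional activity space, three imagined movements, a simple nearest-centroid decoder, and a drift that moves every pattern by the same offset. All names and numbers are hypothetical. The drift is estimated by comparing the average activity of a later day with that of the training day, which only works if the movements are imagined about equally often on both days.

```python
import random

def make_trials(centroids, offset, n_per_class, noise, rng):
    """Simulate trials as (features, label): pattern + day offset + noise."""
    trials = []
    for label, c in enumerate(centroids):
        for _ in range(n_per_class):
            x = [ci + oi + rng.gauss(0.0, noise) for ci, oi in zip(c, offset)]
            trials.append((x, label))
    return trials

def mean_vector(vectors):
    """Component-wise mean of a list of equal-length vectors."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]

def fit_centroids(trials, n_classes):
    """Train the decoder: one average pattern per imagined movement."""
    return [mean_vector([x for x, y in trials if y == k]) for k in range(n_classes)]

def decode(x, centroids):
    """Nearest-centroid decoder: index of the closest learned pattern."""
    dists = [sum((xi - ci) ** 2 for xi, ci in zip(x, c)) for c in centroids]
    return dists.index(min(dists))

def accuracy(trials, centroids, shift=None):
    """Fraction of trials decoded correctly, optionally after removing a shift."""
    correct = 0
    for x, label in trials:
        if shift is not None:
            x = [xi - si for xi, si in zip(x, shift)]
        correct += decode(x, centroids) == label
    return correct / len(trials)

rng = random.Random(0)
true_patterns = [[0.0, 0.0], [4.0, 0.0], [0.0, 4.0]]  # hypothetical movement patterns
drift = [3.0, 2.5]                                    # hypothetical drift on a later day

day0 = make_trials(true_patterns, [0.0, 0.0], 50, 0.5, rng)  # training day
day_n = make_trials(true_patterns, drift, 50, 0.5, rng)      # later day, drifted

decoder = fit_centroids(day0, 3)

# Estimate the drift without labels: difference between the two days' mean activity.
baseline_mean = mean_vector([x for x, _ in day0])
later_mean = mean_vector([x for x, _ in day_n])
estimated_shift = [m - b for m, b in zip(later_mean, baseline_mean)]

acc_uncorrected = accuracy(day_n, decoder)
acc_corrected = accuracy(day_n, decoder, estimated_shift)
print("without drift correction:", acc_uncorrected)
print("with drift correction:   ", acc_corrected)
```

In this toy setting the decoder trained on the first day misreads part of the drifted activity, and subtracting the estimated shift brings accuracy back up. A real interface works with far more dimensions and a drift that need not be a uniform offset.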

Credit: UCSF

A robotic arm can thus be controlled correctly over the long term.

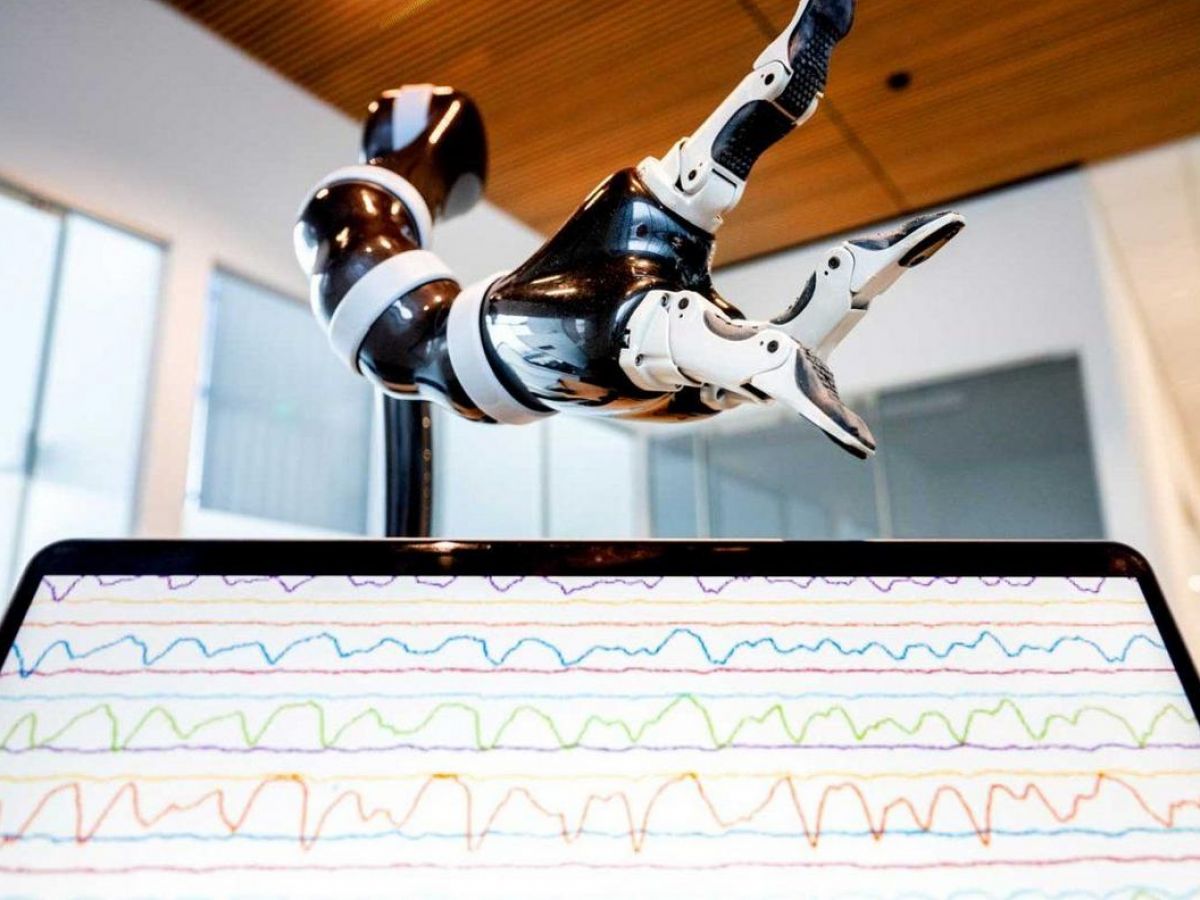

The authors then tested the interface with a robotic arm attached to a table a few meters away from the user. The user then had to use it to pick up objects, manipulate them, and place them in a specific location. The participants completed these exercises with a success rate of 90%, but they lost efficiency after a month. However, rapid recalibration of the artificial intelligence model allowed the accuracy lost over time to be regained.

With this approach, it would therefore be possible to use these brain-machine interfaces to control these robots over the long term. The authors predict that adding cameras to the robot (as the Cybathlon winner did) could further improve the device's performance: the AI could then perceive the arm's environment and the object to be grasped, taking over some of the thinking, particularly for repetitive or more complex tasks. These are encouraging prospects that would make assistant robots easier to use, and therefore more useful.