One in three victims of deepfake pornography, made by combining a photo of a person's face obtained from an acquaintance or from a social networking service (SNS) with pornographic material, were found to be minors.

According to the Korea Women's Human Rights Agency under the Ministry of Gender Equality and Family, as of the 28th, 288 (36.9%) of the 781 people who sought help for deepfake-related harm from the Digital Sex Crime Victim Support Center (Diseong Center) between January 1 and August 25 this year were minors. The number of minors seeking help for deepfake pornography rose from 64 in 2022 to 288 as of the 25th of this year, a 4.5-fold increase in just two years.

Over the same period, the total number of victims seeking help, regardless of age, rose 3.7-fold, from 212 to 781, meaning the number of minor victims is growing faster than the overall number.

An official from the Diseong Center said, "Harm is concentrated in younger age groups, such as teenagers and people in their 20s, because they are relatively more familiar than other age groups with communicating and building relationships online through SNS." The official added, "As generative artificial intelligence (AI) develops, technology that can easily produce illegal videos is spreading, and the resulting harm is also increasing."

Deepfake pornography cases were first reported at universities, and cases have since been confirmed at schools across the country. Education authorities are belatedly stressing to students that deepfake pornography is a "sex crime" and urging them to be careful about disclosing personal information. The Ministry of Education has sent an official letter to the 17 metropolitan and provincial education offices nationwide, requesting their cooperation in providing education to respond to and prevent digital sex crimes, and is also investigating the extent of the damage and the number of victims.

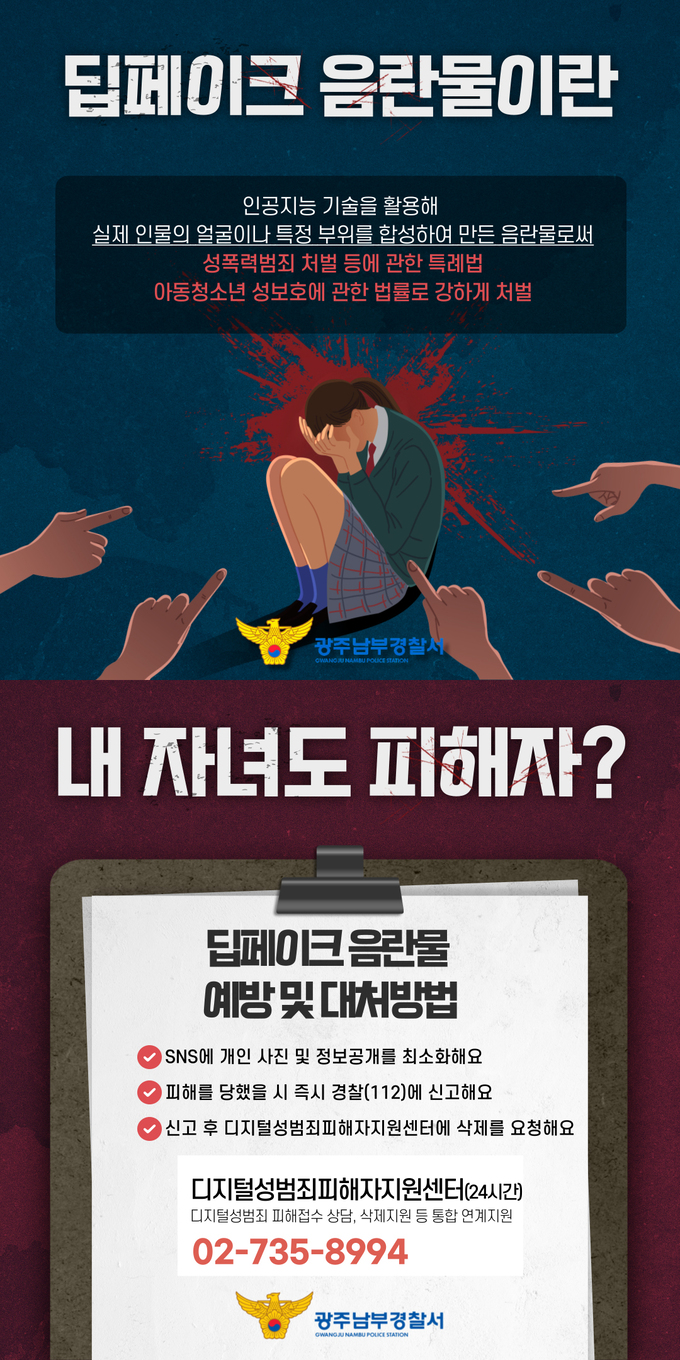

Under current law, if the victim of deepfake sexual exploitation is a child or adolescent under the age of 19, Article 11 of the Child and Adolescent Sexual Exploitation Act (production and distribution of child and adolescent sexual exploitation material, etc.) applies. Possessing or viewing such a video is punishable by at least one year in prison, and producing or distributing it is punishable by a minimum of three years and up to life in prison.

If a victim's photo, used without permission, has been manipulated and distributed in a form that can cause sexual humiliation, the victim can seek help from the Diseong Center. Advice on assistance is available by phone (☎ 02-735-8994), 365 days a year, or through the online bulletin board (d4u.stop.or.kr).

Reporter Park Yu-bin yb@segye.com

[ⓒ Segye Ilbo & Segye.com, unauthorized reproduction and redistribution prohibited]